Posts posted by HackPerception

@groslala - Yes, there will be some upscaling. This headset gets into 5-10+ Gbps territory (even higher if supersampling). With the exception of perhaps 5G UWB, there are no wireless protocols with regulatory approval that can support the insane bandwidth required to drive 5K. We were able to make the original wireless adapter SKU compatible with 3 generations of devices, which is pretty awesome, but fully supporting another generation of devices will probably require a SKU refresh, because it's not our goal to compress the image to hell like you see with Wi-Fi streaming. A ton of countries haven't even approved 5G and don't have a single 5G tower, so that's not a solution OEMs can leverage at scale yet (and if they could, it would be $$$). Wi-Fi 6 requires compression.
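As a rough back-of-the-envelope check on those numbers, uncompressed video bandwidth is just width x height x bits per pixel x refresh rate. This Python sketch assumes 24 bits per pixel and ignores blanking intervals, link-layer overhead, and any compression, so real link requirements run somewhat higher. The panel figures used (2880x1600 @ 90 Hz for the original Vive Pro, 4896x2448 @ 120 Hz for Pro 2) are the commonly listed combined resolutions.

```python
def raw_video_gbps(width: int, height: int, refresh_hz: float, bits_per_pixel: int = 24) -> float:
    """Uncompressed pixel bandwidth in Gbit/s (no blanking, no link overhead)."""
    return width * height * bits_per_pixel * refresh_hz / 1e9


# Combined (both-eye) panel resolutions and refresh rates.
for name, (w, h, hz) in {
    "Vive Pro": (2880, 1600, 90),
    "Vive Pro 2": (4896, 2448, 120),
}.items():
    print(f"{name}: {raw_video_gbps(w, h, hz):.1f} Gbps uncompressed")
```

Running it prints about 10.0 Gbps for the original Pro and 34.5 Gbps for Pro 2 at 120 Hz, before any protocol overhead.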

That said, you'd probably notice a far bigger quality drop with a Wi-Fi system like Quest Link / Virtual Desktop than with the upscaled WiGig image.

With Pro 2, we're hitting the limits of copper cabling. We're right at the edge where you'd need to switch to a fiber optic tether. I think Varjo charges many hundreds of dollars just for a replacement fiber optic tether.

-

Hello @Nosnik

Things are a little different in HK. Please fill out the following form and someone will contact you directly to arrange it:

https://business.vive.com/hk/enterprise_inquiry/

https://www.vive.com/hk/product/vive-pro2/overview/

@Fangh I can confirm that at launch, Focus 3 will not support API access to the cameras due to privacy concerns for our enterprise clients. This is a topic of active conversation; if the situation changes, we'll certainly communicate it loudly.

-

@Fangh - I will ask the WaveSDK team for a first-party confirmation about API access to the front-facing cameras. Privacy is a key concern on this HMD due to the clients buying it.

The headset itself does have stereo-correct pass-through at the OS level, so you don't have to take off the headset to view the outside world. That said, the camera output within the HMD is similar to Quest 2's, albeit at a bit higher resolution and, I think, a bit faster frame rate.

-

@Greenbert - You would not be able to do full-body tracking with Cosmos without implementing an expensive external tracking system like OptiTrack.

What you're going to want is an HMD that uses SteamVR tracking (i.e. Vive Pro), used together with Vive Trackers. Cosmos can use the Trackers if you have the External Tracking Mod.

Here's an example of motion capture software designed for the Trackers: https://www.enter-reality.it/project-rigel

-

As Fox said:

- The Wireless Adapter does work with Pro 2. You need the "Pro Attach Kit", which contains the special short cable to attach a Pro/Pro 2 to the adapter.

- There will be a software update post-launch, which will be needed to achieve higher resolutions. Out of the box, it will upscale.

-

@jsr2k1 - The installer just connects directly to our servers. It's super straightforward with no funny biz, so if you're getting network errors, there's a good chance it's related to a firewall, AV, or port forwarding. Double-check that you're allowing the app through Windows Firewall and any other firewalls or apps that may be moderating your internet connection.
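If you want to rule the network in or out from the same PC, a quick outbound TCP connection test can help. This is a minimal Python sketch using only the standard `socket` module; `tcp_reachable` is a hypothetical helper name. Keep in mind that Windows Firewall rules can be per-application, so a successful connect from Python only shows the port is open on the network path, not that the Viveport app itself is allowed through.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if an outbound TCP connection to (host, port) succeeds.

    DNS failures, refused connections, and timeouts all surface as OSError
    subclasses, so any of them is reported as "not reachable".
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_reachable("viveport.com", 443)` should return `True` on a network that lets HTTPS traffic out.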

Here's the networking info for Viveport, @jsr2k1. It's a super standard HTTP/HTTPS setup:

The Viveport installer and software make outgoing connections to our servers' TCP port 80 (HTTP) and TCP port 443 (HTTPS). DNS name resolution to the DNS service on the client side uses UDP port 53 and TCP port 53. These are the wildcard domains currently in use (they change over time):

- *.htc.com

- *.htcsense.com

- *.htcvive.com

- *.vive.com

- *.viveport.com, *.*.viveport.com & viveport.com

- *.htccomm.com.cn

- *.v1v3.cc

- *.vive.link & vive.link
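If you maintain a firewall or proxy allowlist, it can help to test hostnames against those patterns before deploying the rule. The sketch below is a hypothetical Python helper, not anything shipped with Viveport. It assumes each `*` stands for exactly one DNS label, which is why bare domains such as `viveport.com` have to be listed separately; your firewall product may interpret wildcards differently.

```python
VIVEPORT_PATTERNS = [
    "*.htc.com", "*.htcsense.com", "*.htcvive.com", "*.vive.com",
    "*.viveport.com", "*.*.viveport.com", "viveport.com",
    "*.htccomm.com.cn", "*.v1v3.cc", "*.vive.link", "vive.link",
]


def matches_pattern(host: str, pattern: str) -> bool:
    """True if host matches pattern, where each '*' matches exactly one non-empty DNS label."""
    host_labels = host.lower().rstrip(".").split(".")
    pattern_labels = pattern.lower().split(".")
    if len(host_labels) != len(pattern_labels):
        return False
    return all((p == "*" and h != "") or p == h for p, h in zip(pattern_labels, host_labels))


def is_allowlisted(host: str, patterns=VIVEPORT_PATTERNS) -> bool:
    """True if host matches any of the allowlist patterns."""
    return any(matches_pattern(host, p) for p in patterns)
```

Under this one-label reading, `www.viveport.com` and `cdn.us.viveport.com` match, while `a.b.c.viveport.com` and the bare `vive.com` do not.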

-

@kosmonova - Sasvane is right; Origin is technically only supported on Cosmos. If you were motivated, you could find the Origin.exe binary in the Vive installation folders and launch it manually. It works with most SteamVR controllers, but the current iteration was intended primarily for Cosmos.

If you have Cosmos connected when you're in that Console window, that checkbox should be available for you to enable, as long as Origin hasn't been manually modified or deleted.

@bio998 If you're using the SteamVR SDK, you call IVRSystem::ResetSeatedZeroPose. You may have to go into your project settings and ensure your tracking universe is set to seated so the transform behaves properly. How you do this step depends on your Unity version, but in general you go to Window > SteamVR Input > Advanced Settings > SteamVR Settings > "Tracking Space Origin" [drop-down menu] > "Tracking Universe Seated".
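Conceptually, a seated zero-pose reset makes the head's current position and heading the new origin, so later poses are reported relative to it. The Python sketch below only illustrates that math under stated assumptions (Y is up, heading is a yaw angle in radians about Y, positions are (x, y, z) tuples); it is not how OpenVR implements the call, and `recenter` is a hypothetical name.

```python
import math


def recenter(point, head_pos, head_yaw):
    """Re-express a world-space point relative to a seated origin.

    The new origin sits at head_pos and is rotated by head_yaw (radians)
    about the vertical Y axis, so only heading is reset, not pitch or roll.
    """
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    dz = point[2] - head_pos[2]
    # Undo the head's yaw: rotate the offset by -head_yaw about Y.
    c, s = math.cos(head_yaw), math.sin(head_yaw)
    return (c * dx - s * dz, dy, s * dx + c * dz)
```

By construction, `recenter(head_pos, head_pos, yaw)` returns `(0.0, 0.0, 0.0)`: wherever the head was at reset time becomes the origin.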

-

@Yousef I'm not sure what the conflict is here. Is the problem the 4-player limit for the Vive Wireless Adapter, or are you having a larger issue with the tracking base stations interfering with one another?

If you're using 2.0 base stations, space them every 4-5 meters and blanket the entire space, creating as much overlap as possible. Run the automated channel configuration tool to eliminate channel conflicts. Let me know if this is the case and I can post example diagrams.
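For a rough first-pass layout of 2.0 base stations in a large rectangular space, you can lay out a grid whose spacing never exceeds the 4-5 m guideline. This Python sketch is a hypothetical planning helper, not an official tool: it only computes evenly spaced floor-plan (x, y) mount points for an empty rectangle, and ignores mounting height, aim, occlusion, and how many stations SteamVR can handle at once.

```python
import math


def grid_positions(width_m: float, depth_m: float, max_spacing_m: float = 4.5):
    """Evenly spaced (x, y) mount points covering a width x depth rectangle.

    Adjacent points are never more than max_spacing_m apart along either axis,
    and the rectangle's corners are always included.
    """
    cols = max(2, math.ceil(width_m / max_spacing_m) + 1)
    rows = max(2, math.ceil(depth_m / max_spacing_m) + 1)
    xs = [width_m * i / (cols - 1) for i in range(cols)]
    ys = [depth_m * j / (rows - 1) for j in range(rows)]
    return [(x, y) for y in ys for x in xs]
```

For example, a 10 m x 8 m area with 5 m maximum spacing gives a 3 x 3 grid (9 mount points) at x = 0, 5, 10 and y = 0, 4, 8.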

If you're using 1.0 base stations, that's a tough situation to be in, because each pair of stations needs to be optically isolated from the others. I can also find example posts where I talk about this scenario.

-

@GARBAGEPLAY - For over a year now, we've partnered with iFixit to sell replacement components for the most common problems that can be self-repaired. That said, many parts, such as the camera in this scenario, are put into the headset as entire assemblies. Some parts are more replaceable than others and require various levels of disassembly. Many internal parts require a clean room; otherwise you get dust in the headset.

https://blog.vive.com/us/2021/04/26/vive-ifixit-repair-partner/

-

@darkfyrealgoma - Are you saying the exposure looks the same for you after changing to the 1.0.14.3 beta?

-

Is it the Vive Console giving you this error, or SteamVR? Was it working before and then suddenly stopped?

-

@bauer224 It looks like the USB port on the back is the only one that meets the DisplayPort 1.2 requirement. If any port will support it, it will be this specific port. Check with MSI to see if it is wired to the NVIDIA GPU. If they say it is, try the adapter I linked.

-

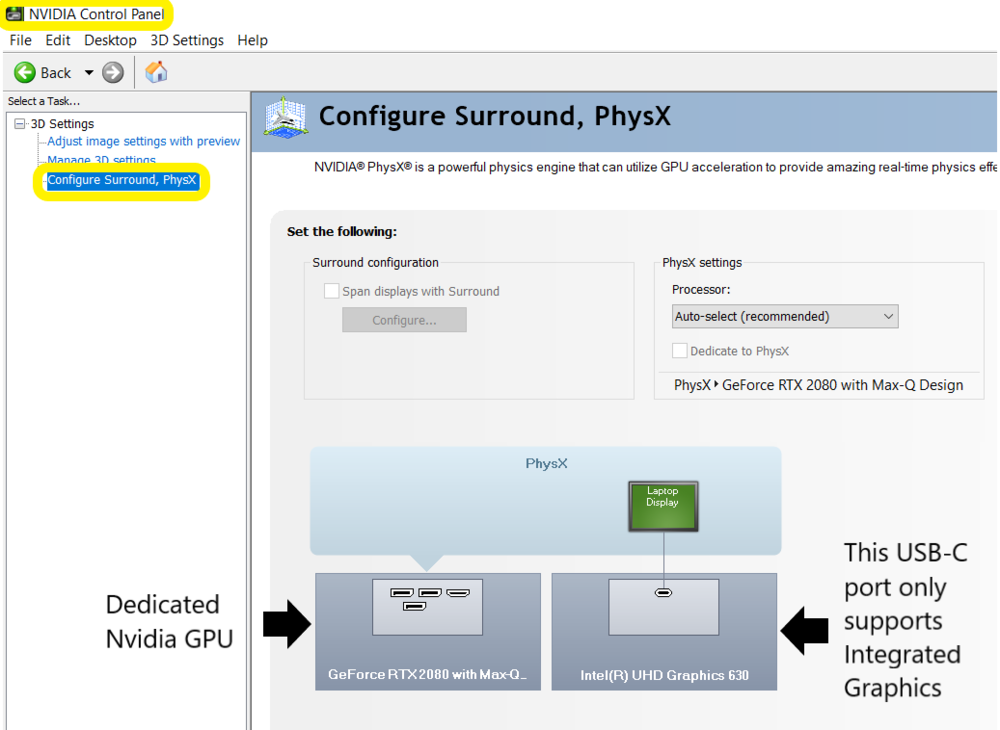

@bauer224 - USB-C is extra tricky because OEMs can integrate it in a number of different ways. Sometimes they don't wire their USB-C port to the graphics card in order to save money.

Overall:

- The USB-C port on the laptop must be physically wired to the dedicated GPU, not just the integrated graphics.

- The port must specifically support DisplayPort 1.2+ signaling.

- Support for this varies widely and is highly model-specific. Some laptop models don't wire the USB-C port to the GPU as a cost-saving measure.

- You may need to contact your manufacturer to confirm how your laptop is wired.

- You can get a rough check of your port mapping via the PhysX page of the NVIDIA Control Panel. If your port is supported, it should show up as a full-sized DisplayPort icon under your GPU.

- I can't speak to your specific adapter without more info. Not all adapters/cables work. The key requirements are that it must support 4K @ 60Hz, must support DisplayPort 1.2+, and must be able to transmit ~20Gbps of bandwidth.

- Here is a USB-C to DisplayPort cable that's known to work with all current Vive desktop HMDs: Club 3D CAC-1507

- If your laptop has a Mini DisplayPort, this is our recommended mDP -> mDP cable, since it's known to work and is under $10: https://www.amazon.com/dp/B0777RKTJB/ref=twister_B077GCMQXJ
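To see where the ~20 Gbps figure comes from: DisplayPort 1.2 (HBR2) runs four lanes at 5.4 Gbps each, or 21.6 Gbps raw, and 8b/10b line coding leaves 80% of that (17.28 Gbps) for pixel data. The Python sketch below checks a video mode against that budget. Like any back-of-the-envelope estimate, it assumes 24 bits per pixel and ignores blanking intervals and Display Stream Compression, so treat a narrow pass as a warning sign rather than a guarantee.

```python
DP12_LANES = 4
DP12_GBPS_PER_LANE = 5.4   # HBR2 raw line rate per lane
ENCODING_EFFICIENCY = 0.8  # 8b/10b: 8 data bits per 10 line bits


def dp12_payload_gbps() -> float:
    """Usable DisplayPort 1.2 bandwidth after 8b/10b line coding."""
    return DP12_LANES * DP12_GBPS_PER_LANE * ENCODING_EFFICIENCY


def mode_gbps(width: int, height: int, refresh_hz: float, bpp: int = 24) -> float:
    """Raw pixel bandwidth of a video mode, ignoring blanking intervals."""
    return width * height * bpp * refresh_hz / 1e9


def fits_dp12(width: int, height: int, refresh_hz: float) -> bool:
    """True if the uncompressed mode fits within the DP 1.2 payload budget."""
    return mode_gbps(width, height, refresh_hz) <= dp12_payload_gbps()


print(f"DP 1.2 payload: {dp12_payload_gbps():.2f} Gbps")
print(f"4K @ 60 Hz needs ~{mode_gbps(3840, 2160, 60):.2f} Gbps, fits: {fits_dp12(3840, 2160, 60)}")
```

4K @ 60 Hz comes out at roughly 11.9 Gbps of pixel data, comfortably inside the 17.28 Gbps budget, which is why it works as a quick sanity check for whether a cable is carrying full DP 1.2 signaling.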

-

@JMS3DPrinting SteamVR has a specific relationship with the IMU because the base stations can only output data at ~120 Hz interleaved (behavior is more complex with 2.0 stations). The IMU data is required for sensor fusion: the base stations provide updates at ~1/10 the rate the IMU can, so the IMU plays a huge role in micro-adjustments and in interpolating when there aren't enough optical samples for a pose. A strong g-shock really confuses the sensor fusion, and it can take a few frames of optical data for the tracking system to gather enough optical samples to create a pose estimate that makes sense for both what the optical sensors are seeing and what the IMU is reporting.
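To make the "fast IMU, slow optical corrections" relationship concrete, here is a toy 1-D fusion loop in Python. It is a simple complementary-style filter chosen purely for illustration; SteamVR's real fusion is a black box and is certainly more sophisticated. The IMU integrates acceleration every step, and every tenth step (mirroring the ~1/10 rate above) an optical position fix pulls the estimate back. The gains `alpha` and `beta` are hypothetical values, not SteamVR parameters.

```python
def fuse(accels, optical_fixes, dt, alpha=0.2, beta=2.0):
    """Toy 1-D IMU + optical fusion.

    accels: IMU acceleration samples (m/s^2), one per time step of length dt.
    optical_fixes: dict {step_index: measured_position_m}; sparse, because the
        optical system updates far less often than the IMU.
    alpha: fraction of the position error corrected per optical fix.
    beta: velocity correction per metre of position error (1/s).
    Returns the fused position estimate after each step.
    """
    pos, vel = 0.0, 0.0
    estimates = []
    for i, a in enumerate(accels):
        # Dead-reckon from the IMU between optical fixes.
        vel += a * dt
        pos += vel * dt
        if i in optical_fixes:
            err = optical_fixes[i] - pos
            pos += alpha * err
            vel += beta * err
        estimates.append(pos)
    return estimates


# A device sitting still, but with a small IMU bias of 0.1 m/s^2.
dt = 0.001                                    # 1 kHz IMU
accels = [0.1] * 1000                         # one second of samples
fixes = {i: 0.0 for i in range(0, 1000, 10)}  # optical says "at 0" at 100 Hz
imu_only = fuse(accels, fixes, dt, alpha=0.0, beta=0.0)
fused = fuse(accels, fixes, dt)
print(f"IMU only: {imu_only[-1]:.4f} m, fused: {fused[-1]:.4f} m")
```

Without optical fixes, the biased IMU drifts about 5 cm in one second; with them, the estimate stays within a millimetre or so. A large g-spike is the reverse problem: the IMU delta is so big that the next few optical fixes disagree badly with it.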

It isn't that this is a Vive-specific thing. It's more of a low-level SteamVR tracking thing, and past a certain depth, SteamVR tracking is a black box to everybody outside of Valve. You'd hit a similar issue with other SteamVR hardware, but the specifics will probably vary a little. Similarly, camera-based optical tracking systems have similar limitations (with the additional limitation that their tracking cameras operate at less than 120 Hz). Every tracking system will have a different way to try to smooth out the uncertainty that arises when the tracking system updates more slowly than the device is moving through physical space. SteamVR relies less on predictive modeling than Oculus does; a lot of Oculus tracking is filtered through machine learning and isn't representative of the real sensor values.

When dealing with high-G-force or high-speed tracking scenarios, a high-speed imaging system like OptiTrack may be the only option for many high-performance use cases.

-

@JMS3DPrinting It's not a case of the IMU not being robust; it's a case of the other tracking components (base stations) working at a fraction of the IMU's refresh rate. The IMU can output data with such a high delta that the sensor fusion can't create a valid pose estimate, and it then needs to hit enough optical samples before it can properly place itself again.

First-generation Vive Trackers had a low-pass filter firmware option that could provide some level of buffering to help smooth out G-spikes, but that same approach isn't possible with newer SteamVR tracking implementations due to changes in the SteamVR hardware stack.

If you have a situation where you're regularly exceeding 8g or moving the controllers faster than SteamVR can track, you're really in the realm of OptiTrack and other high-demand solutions.
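For a sense of how quickly 8g arrives, centripetal acceleration for something swung in a circle is a = ω²r, with ω = 2πf. The Python snippet below is a rough worked example, assuming a controller on a ~0.7 m arm swinging at a steady rate. That is a simplification: real swings also have tangential acceleration and impacts, which only add to the peak.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2


def centripetal_g(radius_m: float, revs_per_s: float) -> float:
    """Centripetal acceleration, in g, for circular motion at a steady rate."""
    omega = 2 * math.pi * revs_per_s  # angular velocity, rad/s
    return omega ** 2 * radius_m / STANDARD_GRAVITY


for f in (1.0, 1.5, 2.0):
    print(f"{f} rev/s on a 0.7 m arm: {centripetal_g(0.7, f):.1f} g")
```

On those assumptions, two revolutions per second on a 0.7 m arm already works out to roughly 11 g, well past the 8g mark.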

@joellipenta - While work does continue around wireless, I would say that we've probably seen most of the AMD compatibility improvements that we're going to see with the current SKU at this stage in the product life cycle. The broad incompatibility had to do with how certain motherboards are physically wired. There's a limit to how much we can hotfix using firmware and software, as this is sometimes tied to a specific hardware design that OEMs implement when building motherboards around certain sockets. Some motherboard models were more receptive to our hotfixes than others based on their design.

-

@AirMouse I tried resetting it from my console. Your account goes back to the old forums, and the new forums must be pulling from an old database for this to be happening.

-

To confirm - this is a status LED misreporting issue. The controllers have hardware-level overcharge protection that protects the battery from overcharging. Leaving them plugged in after charging sessions is okay, regardless of what charge state the LED is reporting.

-

@black dothole - There's a bit of a language barrier here.

The little black dots on the headset aren't IR filters - they're actually IR windows specifically designed to allow IR to pass through to the sensor that's embedded underneath them.

So three things could be happening:

- You're occluding too many sensors.

- The motor and wiring aren't well shielded and are emitting electromagnetic interference (EMI), which is interfering with the headset's circuitry.

- The motor is generating vibration, which is interfering with the IMUs.

It's probably the second one if you're using a decent-sized motor.

-

The "it worked fine, then suddenly lagged" types of problems are statistically the hardest to solve remotely, because whatever is causing the problem is most likely specific to your hardware or software environment.

When this happens, I usually do the following:

- Perform a clean install of the NVIDIA driver, rolling back to a previous known-good version

- Reinstall SteamVR

- Double-check my startup programs to ensure that no new/suspicious tasks are suddenly eating up resources.

Eventually, I've found it can be cleaner to reinstall Windows than to hunt for the needle in the haystack of compositor errors. Clean-installing Windows isn't the most consumer-friendly option, but it can solve a ton of the more stubborn SteamVR problems and save you more time in the long run than trying to troubleshoot it out. I clean-install my PCs every 3-6 months.

If you can, it also helps to test your HMD on a different PC to ensure the hardware itself is fine.

-

@Asish It depends on your setup, your engine, etc. Most Unity devs would take their VR camera rig and script against it to create variables they can then apply conversions to, outputting a quaternion, Euler angles, or a rotation matrix. It really depends on what your project looks like and how much error is allowable, since conversions to quaternions or Euler angles will introduce conversion error, especially Euler conversions.
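As a framework-agnostic illustration of those conversions, the Python sketch below turns a quaternion (w, x, y, z) into a 3x3 rotation matrix and into roll/pitch/yaw Euler angles. It assumes a right-handed frame and the Z-Y-X (yaw-pitch-roll) convention. Unity uses a left-handed frame and documents its own Z, X, Y order for eulerAngles, so these numbers won't match Unity's directly. The clamp before `asin` shows one source of Euler trouble: near ±90° pitch (gimbal lock), roll and yaw become ambiguous.

```python
import math


def normalize(w, x, y, z):
    """Scale a quaternion to unit length."""
    n = math.sqrt(w * w + x * x + y * y + z * z)
    return w / n, x / n, y / n, z / n


def quat_to_matrix(w, x, y, z):
    """3x3 rotation matrix (row-major) for a quaternion, right-handed frame."""
    w, x, y, z = normalize(w, x, y, z)
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def quat_to_euler_zyx(w, x, y, z):
    """(roll, pitch, yaw) in radians, using the Z-Y-X (yaw-pitch-roll) convention."""
    w, x, y, z = normalize(w, x, y, z)
    roll = math.atan2(2 * (w * x + y * z), 1 - 2 * (x * x + y * y))
    # Clamp: rounding can push this slightly past +/-1 near gimbal lock.
    sin_pitch = max(-1.0, min(1.0, 2 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2 * (w * z + x * y), 1 - 2 * (y * y + z * z))
    return roll, pitch, yaw
```

For example, a 90° turn about the Z axis, `quat_to_euler_zyx(math.cos(math.pi / 4), 0, 0, math.sin(math.pi / 4))`, gives a yaw of about π/2 with zero roll and pitch.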

Note that this is a particularly headache-inducing rabbit hole in Unity. Here are some posts that may help lead you toward a working approach.

-

-

@larix007 - I generated a support ticket for you.

If you encounter this issue, the only way to get it resolved is by generating a support ticket using this page.

What commonly happens here is that your account gets tied to the country your IP address resolves to during registration. We can only change your country setting in the backend because it affects a lot of financial stuff, like how taxation works.

@zra123 You can if you're using the optically tracked Cosmos and the optically tracked controllers. Pass-through unfortunately isn't currently supported on the Cosmos Elite/External Tracking Faceplate due to a deep, hard-to-resolve conflict with SteamVR (it's complicated...)

Vive Pro 2 Discount

in VIVE Console Software


I'm working with a number of teams to look into the root cause of this and will respond to this thread with further info as soon as I have it.

@testgrounds @Kikette @Dre64 @Fox is Vibin @VirtualSteve